Yakov and Partners and AI Alliance Russia reviewed domestic and global practices in Generative Artificial Intelligence (GenAI) regulation and found that Russia offers one of the most favorable environments for GenAI development. According to the study, the risks stemming from the development of this technology relate mostly to how responsibly developers and users handle generated content.

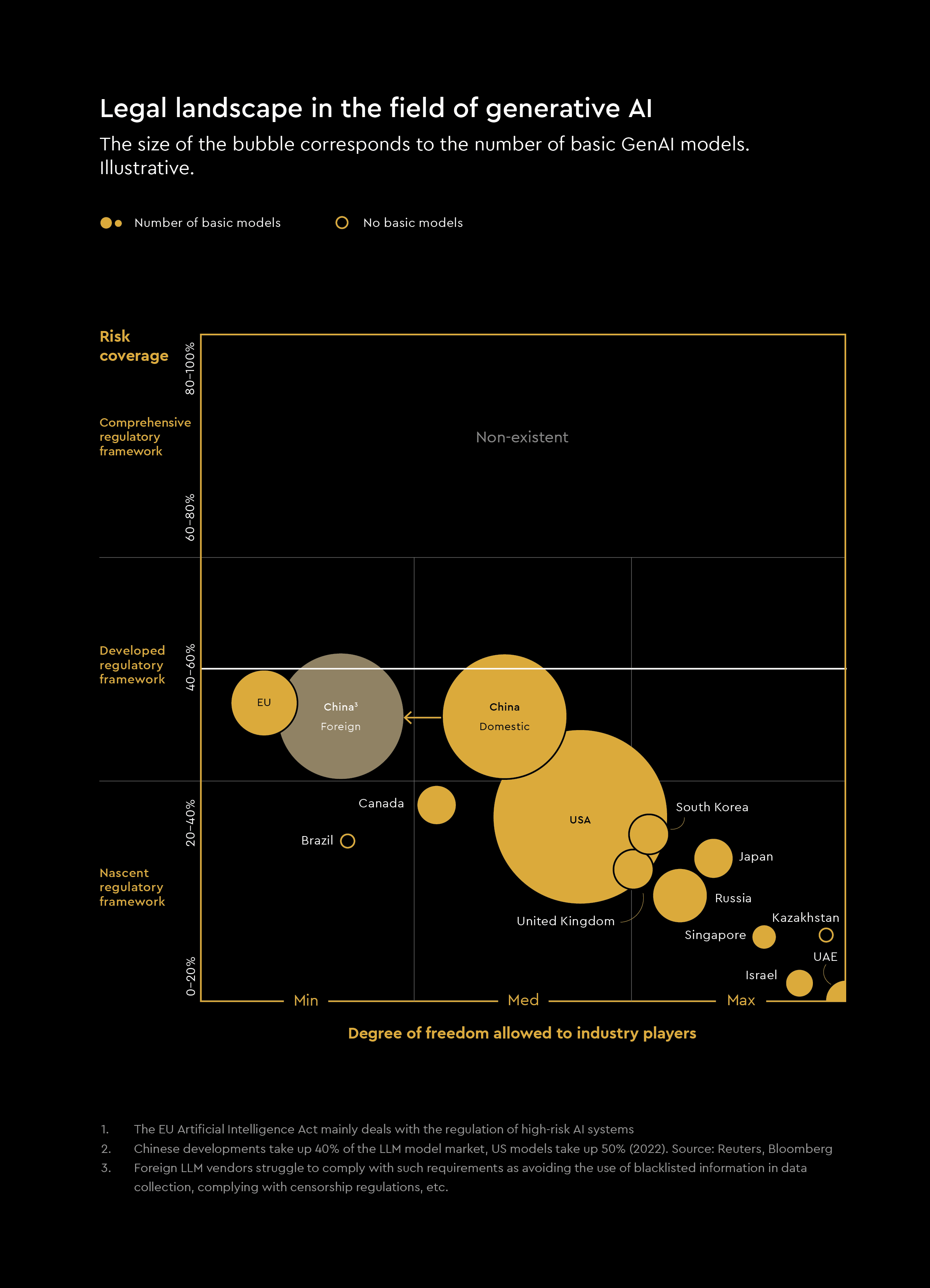

Analysts from Yakov and Partners and AI Alliance Russia identified the most favorable countries for the development of AI technologies and categorized them into several groups based on the maturity of the local regulatory framework and the degree of freedom allowed to AI developers. The leading group, whose environments are the most favorable and spur both AI development and investment inflows, includes the US, Israel, Singapore, the UAE, and Russia. The second group is represented by China, which uses two models simultaneously, creating favorable conditions for domestic developers and entry barriers for foreign suppliers. The third group includes the EU, Canada, and Brazil. These jurisdictions aim to minimize potential risks associated with the implementation and use of the technology, which creates substantial barriers to GenAI development.

The study found that no current legal and regulatory framework in any country covers the entire lifecycle of a GenAI-based service, from model development and training to the application of its output. In the absence of universally established approaches to risk mitigation, each country incrementally introduces and tests requirements for the stages it considers risky.

In addition, the study examined GenAI incidents from around the globe and identified the five GenAI risks most relevant for Russia: a surge in low-quality content generated by GenAI; potential harm caused by misleading GenAI responses; negative impact on the labor market; digital fraud; and ethical violations. Analysts emphasize that the majority of those risks can be minimized by labeling generated content (including with hidden watermarks), creating services to detect AI-generated content, and using AI responsibly. In 2023, seven foreign AI players (Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI) committed to secure and trustworthy AI development and implementation. The list of their commitments includes, among other things, labeling of generated content and safety testing of developed solutions.

Russian industry players have also committed to self-regulation. In 2021, members of AI Alliance Russia pledged to abide by the AI Code of Ethics, which was supplemented in 2024 by the Declaration on Responsible Development and Use of Generative AI.

Minimizing the risks also requires improving the population's digital and legal literacy, for example by informing people about the risks and opportunities of generative AI, the specifics of its use, and the liability that such use may entail. Users should also treat any AI-generated content critically.

Taking into account the experience of foreign countries and the pace of AI development in Russia, self-regulation mechanisms appear to be the optimal approach to AI regulation, as they strike a balance between tight regulation and room for the technology to develop. This approach should include constant monitoring of GenAI development in Russia and a robust user feedback loop, which will serve to improve the quality of GenAI systems and strengthen the legal basis for their functioning. At the first stage, changes prompted by new information can be reflected through amendments to the Russian Declaration on Responsible Development and Use of Generative AI.

The mechanisms tested under the self-regulatory regime could later form the basis for developing experimental GenAI legal regimes.